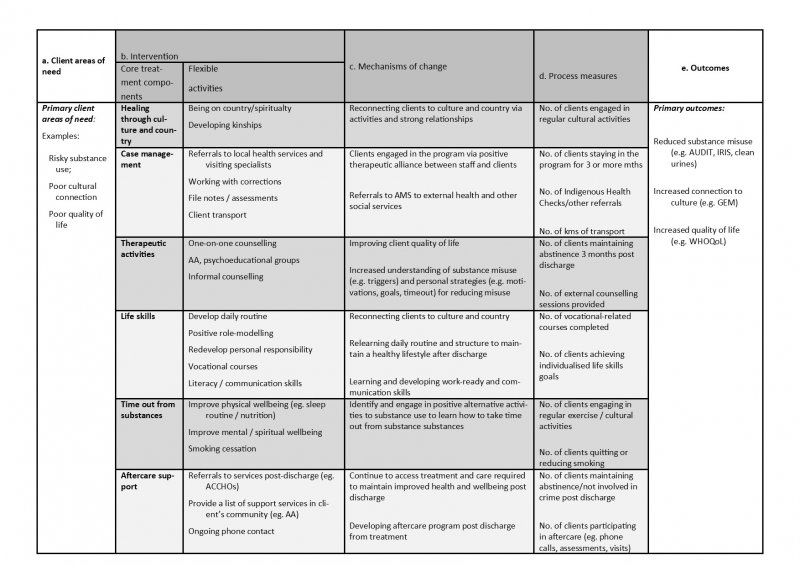

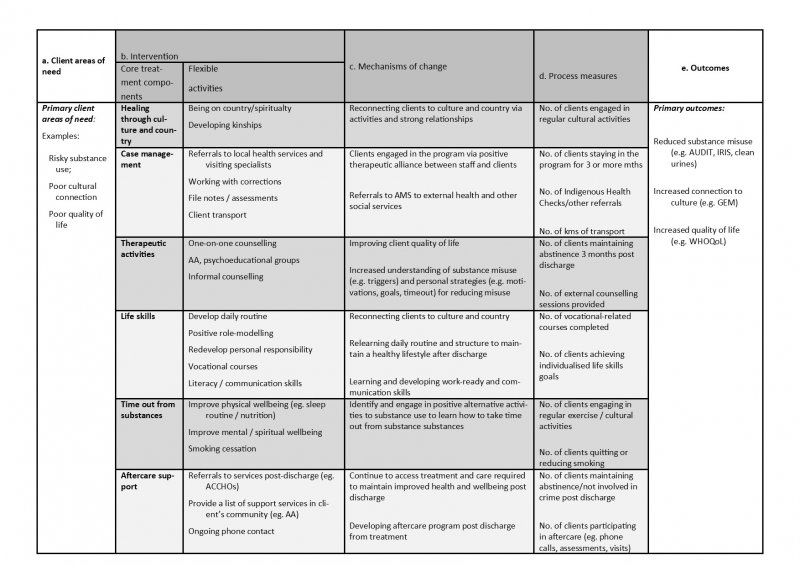

For the full program logic, see Munro et al. (2017): https://healthandjusticejournal.biomedcentral.com/articles/10.1186/s40352-017-0056-z

The impact of research that actively engages with communities, non-government organisations and clinical services can be fundamentally influenced by the engagement processes that researchers devise and implement. The corollary of this proposition is that establishing a pragmatic, or even evidence-based, process of change is likely to improve outcomes for communities and clients of services. So is it feasible to establish an evidence-based process for engaging communities and services in research?

One useful framework for guiding the process of engagement is the program logic. Although program logics are not new [1,2], they can precisely identify the goals of a collaboration, clarify the proposed activities, articulate why those activities are likely to achieve the collaboration's goals, and ensure that the activities are closely aligned with the goals, the expected outcomes, and measures of both processes and outcomes. In other words, they can be used to address one of the key weaknesses noted by Stockings et al. in their systematic review of community-based interventions, summarised in this edition of Connections, namely that “Efforts should be made to standardize outcomes and measures,…”. In engaging communities and services in research, we have trialled program logics in two different ways.


What is a program logic?


First, we have used them to define prevention or treatment programs in research conducted in partnership with Aboriginal communities (a forthcoming paper led by Dr Mieke Snijder), with an NGO service for high-risk young people [3] (a paper led by Alice Knight) and with clinical services, namely Aboriginal drug and alcohol residential rehabilitation services in NSW [4] (a paper led by Dr Alice Munro). One innovation we have applied to standard program logics is to require that the proposed mechanism of, or rationale for, change be articulated: that is, why a program or its defined activities are likely to achieve the proposed outcomes. Another innovation is to define programs in terms of two separate concepts: core components that are standardised across similar services or communities, and flexible activities that operationalise, or give effect to, those core components. Combining standardised and flexible components is aimed at resolving a well-established but still largely intractable tension, frequently noted in the complex intervention literature, between the need for standardisation (to provide adequate comparability across programs delivered by different services in different circumstances) and the need for sufficient flexibility to allow tailoring to the resources and circumstances of different settings [5,6,7].

Second, we have used program logics to try to delineate and specify the process of change by which clinical or program innovations might be implemented into routine practice. We have developed our process of change models while partnering with drug and alcohol and mental health clinical services in the Mid-North Coast Local Health District. Specifically, we have designed a process of moving from independent delivery of services for clients with both drug and alcohol and mental health disorders to a model of integrated care. Essentially, this process has required both drug and alcohol and mental health clinicians to re-design their systems of care to ensure required services are brought to clients rather than expecting clients to attend separate services. Understanding this change process, and translating it into a pragmatic and replicable framework to guide its use elsewhere, is the subject of two forthcoming papers (led by Cath Foley, who will present this work at NDARC’s Annual Symposium on October 8th, 2018).

Promising as pragmatic program logics are for facilitating research partnerships between academics, communities and services more effectively, they should only be promoted and further developed if they embody three critical principles.

The first critical principle is that program logics must embody evidence-based practice (EBP). According to the original definition of EBP, this means that program logics must integrate the best available external evidence with the expertise of individual service providers or key community stakeholders [8]. Typically, the best available external evidence is distilled from the findings of systematic reviews or meta-analyses, such as the review of community-based interventions published by Stockings et al. in this edition of Connections. Program logics can be used to elicit the expert knowledge of practitioners and community members, both to identify additional standardised core components of a program (such as culturally specific components that may be identified in Indigenous contexts) and to operationalise those core components for their own specific circumstances.

The second critical principle is that program logics must be co-created: they are unlikely to be effective unless they emerge from genuine and respectful partnerships that seek to use the knowledge and skills of everyone participating in the partnership. Clients of services, clinicians and service providers, community members, researchers and sometimes even funders of research all have a legitimate contribution to make towards achieving co-designed outcomes. Indeed, because partnership research is inherently co-created, we explored the concept of co-creation and found it to be clearly under-developed. We currently have a paper under editorial review (led by PhD candidate Tania Pearce) that aims to establish a definition of what constitutes the co-creation of new knowledge.

The third critical principle is that the programs or clinical innovations co-designed and implemented using program logics must be evaluated with rigorous designs and high-quality measures. This requires more than pre/post evaluations in single settings, which underscores the importance of program logics producing definitions of services and community-based programs that can be standardised across services or communities while also reflecting best-evidence practice and being tailored to different circumstances. It does not, however, always require full-scale cluster randomised controlled trials (RCTs). We have previously detailed the limitations of RCTs [9] and described rigorous but pragmatic alternatives, such as multiple baseline designs [10].

Professor Donald Berwick is one of the key figures in the process of change literature, at least as it has been applied to health and medicine. He is fond of quipping something to the effect that every system is perfectly designed to produce the outcomes that it produces. The challenge to which this alludes is that improved client, patient or community outcomes will not simply happen because of new knowledge generated in isolation from the systems that allow services and communities to function: those who work in those systems or communities must be integral to the design, creation and application of new knowledge. One way to facilitate this process could be greater use of pragmatic and evidence-based program logics.

Acknowledgements: while the views expressed in this opinion piece are my own, I wish to acknowledge the intellectual and pragmatic contributions of my many collaborators in the research projects relevant to this opinion piece, including service providers, community members, higher degree research students and academic colleagues. Their insights and willingness to engage in research have strongly shaped this opinion piece.

References
